SMART PHP STATISTICS

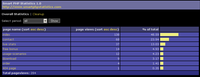

What is Smart Statistics?

Smart Statistics is a PHP script that offers semantic statistics and lets webmasters easily monitor the most visited pages of a site over a given period.

This script is very different from regular statistics and logging scripts, both in purpose and in scale. Most statistics scripts and log analyzers offer a huge amount of information, but you can rarely find anything in it that helps you improve your site or learn what your users are really interested in.

Instead of producing complex statistics, Smart Statistics looks at your site the way you do: by pages or by sections. It tells you:

- which pages or sections your visitors visit most

- how the popularity of a page or section has evolved over a period
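To make these two questions concrete, here is a minimal sketch that answers them over an in-memory list of hits. It is not the script's own code: the functions `ss_top_pages()` and `ss_page_trend()` and the hit record shape (`['page' => ..., 'day' => 'YYYY-MM-DD']`) are hypothetical, and the real script reads its data from MySQL.

```php
<?php
// Hypothetical sketch: NOT the actual Smart Statistics source.
// Each hit is assumed to look like ['page' => 'homepage', 'day' => '2024-01-31'].

/**
 * Most visited pages between $from and $to (inclusive, 'YYYY-MM-DD' strings).
 * Returns [page => hits], sorted by hits in descending order.
 */
function ss_top_pages(array $hits, string $from, string $to, int $limit = 10): array
{
    $counts = [];
    foreach ($hits as $hit) {
        // ISO dates (YYYY-MM-DD) compare correctly as plain strings.
        if ($hit['day'] < $from || $hit['day'] > $to) {
            continue;
        }
        $counts[$hit['page']] = ($counts[$hit['page']] ?? 0) + 1;
    }
    arsort($counts);                               // highest hit count first
    return array_slice($counts, 0, $limit, true);  // keep page names as keys
}

/**
 * Daily hit counts for one page, showing how its popularity evolved.
 * Returns [day => hits], sorted by day.
 */
function ss_page_trend(array $hits, string $page): array
{
    $trend = [];
    foreach ($hits as $hit) {
        if ($hit['page'] === $page) {
            $trend[$hit['day']] = ($trend[$hit['day']] ?? 0) + 1;
        }
    }
    ksort($trend);  // chronological order
    return $trend;
}
```

For example, `ss_top_pages($hits, '2024-01-01', '2024-01-31')` would list January's most visited pages, and `ss_page_trend($hits, 'homepage')` would show day by day whether the homepage is gaining or losing visitors.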

To better understand how you can use this script on your site, see the usage scenarios section, which walks through some real-life examples and shows how you could benefit.

How does it work?

Smart Statistics is a single PHP file that you include in every page you want to monitor. After including the script, call the ss_log() function and pass it the name of the page as a parameter. If no name is given, the value of $_SERVER['SCRIPT_NAME'] is used. Sample code to include the script:

include "smart_statistics.php";

ss_log("homepage");
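The page-name fallback described for ss_log() can be sketched as follows. This is a hypothetical illustration, not the script's actual source: the helper names `ss_resolve_page_name()` and `ss_make_hit()`, the `'unknown'` default when `SCRIPT_NAME` is missing, and treating an empty string as "no name" are all assumptions.

```php
<?php
// Hypothetical sketch: NOT the actual Smart Statistics source.

// An explicit page name wins; otherwise fall back to $_SERVER['SCRIPT_NAME'].
function ss_resolve_page_name(?string $name, array $server): string
{
    if ($name !== null && $name !== '') {
        return $name;
    }
    // Assumed last-resort default if SCRIPT_NAME is not set (e.g. on the CLI).
    return $server['SCRIPT_NAME'] ?? 'unknown';
}

// Builds one hit record: which page was viewed and on which (UTC) day.
function ss_make_hit(?string $name, array $server, int $timestamp): array
{
    return [
        'page' => ss_resolve_page_name($name, $server),
        'day'  => gmdate('Y-m-d', $timestamp),
    ];
}
```

Inside a monitored page, a call such as `ss_make_hit(null, $_SERVER, time())` would record the current script path, while `ss_make_hit('homepage', $_SERVER, time())` would group the hit under the friendlier name "homepage".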

Requirements

This script is built in PHP and stores its data in a MySQL database.
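The database layout is not described here. As a hypothetical sketch, assuming one row per page per day with a running counter, the storage side could look like this; the table name `ss_hits`, its columns, and the function `ss_record_hit()` are illustrative assumptions, not the script's real schema.

```php
<?php
// Hypothetical sketch: NOT the actual Smart Statistics schema or code.
// One row per (page, day) keeps the table small compared with logging every request.
const SS_SCHEMA = <<<'SQL'
CREATE TABLE IF NOT EXISTS ss_hits (
    page VARCHAR(255) NOT NULL,
    day  DATE         NOT NULL,
    hits INT UNSIGNED NOT NULL DEFAULT 0,
    PRIMARY KEY (page, day)
)
SQL;

// Increments the counter for $page on $day, creating the row on the first hit.
// INSERT ... ON DUPLICATE KEY UPDATE is MySQL-specific syntax.
function ss_record_hit(PDO $db, string $page, string $day): void
{
    $stmt = $db->prepare(
        'INSERT INTO ss_hits (page, day, hits) VALUES (:page, :day, 1)
         ON DUPLICATE KEY UPDATE hits = hits + 1'
    );
    $stmt->execute([':page' => $page, ':day' => $day]);
}
```

For example, after connecting with `new PDO('mysql:host=localhost;dbname=stats', $user, $password)` (placeholder DSN and credentials), you would run `$db->exec(SS_SCHEMA)` once and then call `ss_record_hit($db, 'homepage', gmdate('Y-m-d'))` on each page view. Aggregating per day trades per-request detail for compact tables, which fits the script's focus on page popularity over time.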